Integration-testing with py.test

Writing some Tahoe-LAFS “magic-folder” tests

I really, really like py.test and the nice, clean, composable style of @fixtures. Recently, I reworked some integration-style tests for the “magic-folder” feature of Tahoe-LAFS.

During development of Magic Folders I created a script that set up a local Tahoe grid along with an Alice and Bob client (with paired magic-folders). This script then did some simple integration-style tests of the feature. You can gaze into the abyss directly – not great!

Doing proper end-to-end tests of this is relatively hard; you need a bunch of co-operating processes (an Introducer, some Storage nodes, and two client nodes) with mutual dependencies.

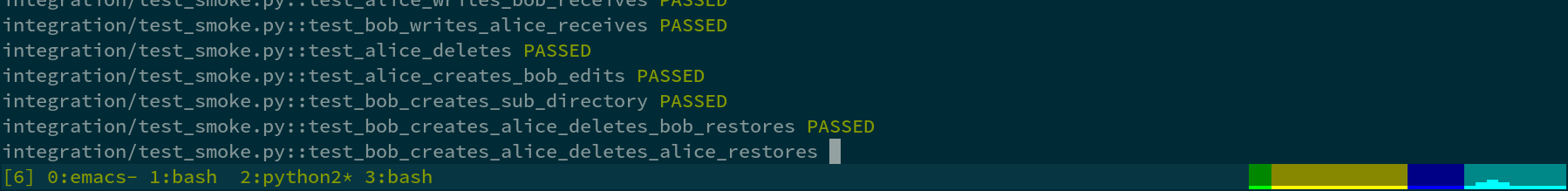

So using that as a basis, I turned this into “real” integration tests using py.test:

- run a log-gathering service to collect all logs;

- fixtures to set up the various processes;

- everything runs in clean tempdirs;

- proper tear-down;

- real assertions for tests.

The Actual Tests

Let's look at the best part first: the actual tests. In the end, all the tests are really nice and clean (see them all in integration/test_magic_folder.py). For example:

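    from os.path import join

    import util  # helper module next to the integration tests (import assumed)


    def test_bob_writes_alice_receives(magic_folder):
        alice_dir, bob_dir = magic_folder
        # Bob writes a file into his magic-folder...
        with open(join(bob_dir, "second_file"), "w") as f:
            f.write("bob wrote this")
        # ...and we wait until the same contents appear in Alice's.
        util.await_file_contents(join(alice_dir, "second_file"), "bob wrote this")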

This lets us push all the complexity and clutter of “how do I set up a magic-folder” into the fixtures – and any test that is doing things with one just has to declare a magic_folder argument. The test then becomes pretty clear: Bob writes a file to his magic-folder, and we wait to ensure that it appears in Alice’s magic-folder.

So, this is really nice!
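The waiting step is the only part of that test that is not plain file I/O. The real util.await_file_contents is not reproduced here, so what follows is only a minimal sketch of how such a polling helper could look; the timeout and interval parameters and their defaults are assumptions for illustration.

```python
import time


def await_file_contents(path, contents, timeout=15.0, interval=0.5):
    """Poll until `path` exists and holds exactly `contents`, or time out.

    Hypothetical sketch; the real helper in the test suite may differ.
    """
    deadline = time.monotonic() + timeout
    while True:
        try:
            with open(path, "r") as f:
                if f.read() == contents:
                    return
        except FileNotFoundError:
            pass  # nothing has been synced yet; keep waiting
        if time.monotonic() >= deadline:
            raise AssertionError(
                "{!r} did not contain the expected contents after {}s".format(
                    path, timeout
                )
            )
        time.sleep(interval)
```

Raising AssertionError on timeout means a file that never arrives shows up as an ordinary test failure rather than a hang.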

Granular Setup

Okay, but what about that “complexity and clutter”?

In “traditional” test-runners like the stdlib unittest module, a test-suite has a single setUp method where all the setup happens. You can of course choose to instantiate helpers and the like in these methods, but in my experience what tends to happen is that “everything” to do with setup is done in the one method.

What can be even worse is when inheritance is used (either via mix-ins or in a “traditional” hierarchy), since it becomes less obvious which tests need what setup. In either case, most state is then stored on self, which also becomes untenable and confusing. That is, answering the questions “where does self.foo come from?” or “does this test need self.foo?” becomes harder and harder.

Since py.test gives you inter-fixture dependencies, this situation can be drastically improved. Specifically, I have a setup like this:

- some “utility” setup and teardown (tempdirs, etc);

- a flogtool gather service to collect Foolscap logs;

- an Introducer (depends on logging service);

- five storage nodes (depend on Introducer and logger);

- Alice and Bob clients (depend on Introducer, Storage).

We can declare these dependencies easily in the fixture declarations themselves. Reproducing just the definitions, we can easily see the dependencies:

    @pytest.fixture(scope='session')
    def flog_gatherer(reactor, temp_dir, flog_binary, request):
        # ...

    @pytest.fixture(scope='session')
    def introducer(reactor, temp_dir, tahoe_binary, flog_gatherer, request):
        # ...

    @pytest.fixture(scope='session')
    def storage_nodes(reactor, temp_dir, tahoe_binary, introducer, introducer_furl, flog_gatherer, request):
        # ...

This gives many advantages! Each fixture does just one thing, so you can ignore irrelevant or “obvious” things (e.g. temp_dir). It’s easier to compose them together. You can more easily re-use them (for example, a future test might just need an Introducer). You are also Strongly Encouraged by the py.test infrastructure to put finalizer code “right near” the setup code, which is also very nice.

Obviously it’s still not magic, and this setup is still pretty complex. However, the declarative nature of the fixture inter-dependencies really helps a reader (I think!) see how things are related. The granularity also allows you to quickly find interesting things (e.g. “I wonder how the storage nodes get set up?”)
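To make the “finalizer right near the setup” point concrete, here is a small self-contained sketch. It is not the Tahoe-LAFS code: service_a and service_b are hypothetical fixtures, and start_sleeper is a stand-in that launches an idle child process instead of a real node. What it does show is the pattern itself: session-scoped fixtures that depend on each other by argument name and register their teardown with request.addfinalizer.

```python
import shutil
import subprocess
import sys
import tempfile

import pytest


def start_sleeper(cwd):
    """Stand-in for launching a real node: a child process that just idles."""
    return subprocess.Popen(
        [sys.executable, "-c", "import time; time.sleep(60)"],
        cwd=cwd,
    )


def stop_process(proc):
    """Terminate a child process (if still running) and wait for it to exit."""
    if proc.poll() is None:
        proc.terminate()
    proc.wait()


@pytest.fixture(scope="session")
def temp_dir(request):
    path = tempfile.mkdtemp(prefix="integration-")
    # Teardown sits right next to the setup it undoes.
    request.addfinalizer(lambda: shutil.rmtree(path, ignore_errors=True))
    return path


@pytest.fixture(scope="session")
def service_a(temp_dir, request):
    # Hypothetical first service; depends only on temp_dir.
    proc = start_sleeper(temp_dir)
    request.addfinalizer(lambda: stop_process(proc))
    return proc


@pytest.fixture(scope="session")
def service_b(temp_dir, service_a, request):
    # Naming service_a as an argument makes pytest set it up first.
    proc = start_sleeper(temp_dir)
    request.addfinalizer(lambda: stop_process(proc))
    return proc


def test_services_running(service_a, service_b):
    assert service_a.poll() is None
    assert service_b.poll() is None
```

Because py.test tears fixtures down in the reverse of the order it set them up, service_b is stopped before service_a, and the temporary directory is removed last.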

When Things Go Bad

When tests fail, you’re sad. But then what? I added a --keep-tempdir option (thanks to py.test’s very extensive customization hooks) so that you can keep the entire setup of the grid. As well, all the logs gathered are dumped to a tempfile (that’s also retained).

Combined with passing -s to py.test, you get a complete postmortem of everything: you get all stdout from all the processes, and all the Foolscap logging. You can also now re-start any or all of the local grid (including the log-collector). This is usually a big step up from any manual tests (the log-collector is usually missing then, for example), and of course is far more repeatable.
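For readers who have not used the customization hooks, the following is a rough sketch of how an option like --keep-tempdir can be wired up in a conftest.py. The pytest_addoption hook and request.config.getoption are standard py.test APIs; the help text, the remove_unless_kept helper, and the body of this temp_dir fixture are assumptions for illustration, not the actual implementation.

```python
import shutil
import tempfile

import pytest


def pytest_addoption(parser):
    # Standard pytest hook for registering command-line options.
    parser.addoption(
        "--keep-tempdir",
        action="store_true",
        default=False,
        help="Do not delete the temporary directory after the run",
    )


def remove_unless_kept(path, keep):
    """Delete `path`, or only report its location when `keep` is true."""
    if keep:
        # Only visible on the console when py.test is run with -s.
        print("Keeping temporary directory: {}".format(path))
    else:
        shutil.rmtree(path, ignore_errors=True)


@pytest.fixture(scope="session")
def temp_dir(request):
    path = tempfile.mkdtemp(prefix="integration-")
    keep = request.config.getoption("--keep-tempdir")
    request.addfinalizer(lambda: remove_unless_kept(path, keep))
    return path
```

Running py.test -s --keep-tempdir with a setup like this leaves the directory in place and prints where to find it.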

Gnits and Gotchas

The only thing I don’t like about this setup is that the magic_folder fixture takes some non-trivial amount of time to instantiate (because it’s making a logger, introducer, 5 storage nodes, etc) and there’s no way to show progress to the user.

What I did was make some “dummy” tests (in test_aaa_aardvark) that run first and declare dependencies on just some of the simpler fixtures, in order. So, you see a test_introducer run as soon as the Introducer is set up, etc. In this manner you see some amount of progress (and you can safely skip any of these).

It would be nice if py.test had a built-in way to show progress of fixtures getting instantiated, especially for integration-style tests.
